YOLOv8-Based Detection on the HIT-UAV High-Altitude Infrared UAV Dataset: A Modified SimAM Attention Improves mAP (Part 1)


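💡💡💡What this post covers: improving a YOLOv8-based detector on the HIT-UAV high-altitude infrared UAV dataset, with each modification validated experimentally.

1) Modified SimAM attention with a slicing operation: mAP50 rises from the baseline 0.773 to 0.78

2) Modified SimAM combined efficiently with convolution: mAP50 rises from the baseline 0.773 to 0.785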

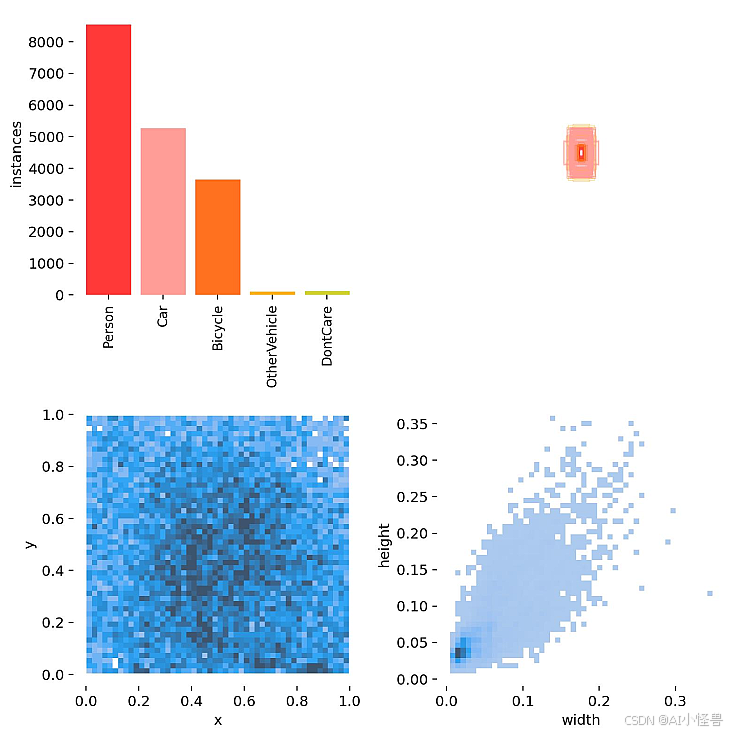

1. The HIT-UAV high-altitude infrared dataset

The dataset contains 2008 training images, 287 validation images, and 571 test images. The classes are:

0: Person

1: Car

2: Bicycle

3: OtherVehicle

4: DontCare

A detail view is shown below:

1.1 split_train_val.py
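The script below splits the annotation files at random using two ratios. As a quick sanity check on what those ratios produce, the set sizes can be computed up front. This is a minimal sketch: `split_counts` is a hypothetical helper that is not part of the original script, and it only mirrors the script's integer arithmetic.

```python
def split_counts(num, trainval_percent=0.9, train_percent=0.8):
    """Return the train/val/test sizes the split script would produce.

    num is the number of annotation files; the two ratios match the
    defaults hard-coded in split_train_val.py.
    """
    tv = int(num * trainval_percent)  # files in train + val
    tr = int(tv * train_percent)      # files in train
    return {"train": tr, "val": tv - tr, "test": num - tv}


if __name__ == "__main__":
    # 2866 = 2008 + 287 + 571, the image total quoted in section 1.
    print(split_counts(2866))
```

With the default ratios, 2866 files would be split 2063 / 516 / 287 (roughly 72% / 18% / 10%). That differs from the 2008 / 287 / 571 split quoted in section 1, so those counts do not come from these default ratios.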

# coding:utf-8
import argparse
import os
import random

parser = argparse.ArgumentParser()
# Path to the XML annotations; adjust for your data (usually the Annotations folder)
parser.add_argument('--xml_path', default='Annotations', type=str, help='input xml label path')
# Output folder for the split lists; usually ImageSets/Main under your dataset
parser.add_argument('--txt_path', default='ImageSets/Main', type=str, help='output txt label path')
opt = parser.parse_args()

trainval_percent = 0.9  # share of all files used for train + val; the rest is test
train_percent = 0.8     # share of trainval used for train; the rest is val
xmlfilepath = opt.xml_path
txtsavepath = opt.txt_path
total_xml = os.listdir(xmlfilepath)
if not os.path.exists(txtsavepath):
    os.makedirs(txtsavepath)

num = len(total_xml)
list_index = range(num)
tv = int(num * trainval_percent)
tr = int(tv * train_percent)
trainval = random.sample(list_index, tv)
train = random.sample(trainval, tr)

file_trainval = open(txtsavepath + '/trainval.txt', 'w')
file_test = open(txtsavepath + '/test.txt', 'w')
file_train = open(txtsavepath + '/train.txt', 'w')
file_val = open(txtsavepath + '/val.txt', 'w')

for i in list_index:
    name = total_xml[i][:-4] + '\n'  # drop the ".xml" extension
    if i in trainval:
        file_trainval.write(name)
        if i in train:
            file_train.write(name)
        else:
            file_val.write(name)
    else:
        file_test.write(name)

file_trainval.close()
file_train.close()
file_val.close()
file_test.close()

1.2 voc_label.py: generate txt labels in the format YOLOv8 expects
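Before the full script, the core coordinate conversion is worth seeing in isolation. A YOLO label stores each box as a class id followed by the normalized center x, center y, width, and height, whereas VOC XML stores pixel corners. The sketch below mirrors the arithmetic of the script's `convert` function, including its one-pixel offset on the center. The function name `voc_to_yolo`, the example box, and the 640x512 image size are assumptions chosen for illustration.

```python
def voc_to_yolo(size, box):
    """Convert a VOC pixel box to normalized YOLO (x_center, y_center, w, h).

    size: (image_width, image_height) in pixels
    box:  (xmin, xmax, ymin, ymax) in pixels, the order used by voc_label.py
    """
    img_w, img_h = size
    xmin, xmax, ymin, ymax = box
    # Box center in pixels; the "- 1" reproduces the offset used in convert().
    x_center = (xmin + xmax) / 2.0 - 1
    y_center = (ymin + ymax) / 2.0 - 1
    # Divide by the image size so every value is relative to the image.
    return (x_center / img_w, y_center / img_h,
            (xmax - xmin) / img_w, (ymax - ymin) / img_h)


if __name__ == "__main__":
    # Hypothetical 100x100 px box inside an assumed 640x512 image.
    print(voc_to_yolo((640, 512), (100, 200, 50, 150)))
```

For this example box the label values are 149/640, 99/512, 100/640, and 100/512, so a box whose corners lie inside the image always maps to values between 0 and 1.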

# -*- coding: utf-8 -*-
import os
import xml.etree.ElementTree as ET

sets = ['train', 'val', 'test']
classes = ["Person", "Car", "Bicycle", "OtherVehicle", "DontCare"]  # change to your own classes
abs_path = os.getcwd()
print(abs_path)


def convert(size, box):
    # size = (width, height); box = (xmin, xmax, ymin, ymax), all in pixels
    dw = 1. / (size[0])
    dh = 1. / (size[1])
    x = (box[0] + box[1]) / 2.0 - 1
    y = (box[2] + box[3]) / 2.0 - 1
    w = box[1] - box[0]
    h = box[3] - box[2]
    x = x * dw
    w = w * dw
    y = y * dh
    h = h * dh
    return x, y, w, h


def convert_annotation(image_id):
    # Context managers close both files, so every label file is flushed to disk
    with open('Annotations/%s.xml' % (image_id), encoding='UTF-8') as in_file, \
            open('labels/%s.txt' % (image_id), 'w') as out_file:
        tree = ET.parse(in_file)
        root = tree.getroot()
        size = root.find('size')
        w = int(size.find('width').text)
        h = int(size.find('height').text)
        for obj in root.iter('object'):
            difficult = obj.find('difficult').text
            # difficult = obj.find('Difficult').text
            cls = obj.find('name').text
            if cls not in classes or int(difficult) == 1:
                continue
            cls_id = classes.index(cls)
            xmlbox = obj.find('bndbox')
            b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text),
                 float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
            b1, b2, b3, b4 = b
            # Clamp boxes that extend past the image border
            if b2 > w:
                b2 = w
            if b4 > h:
                b4 = h
            b = (b1, b2, b3, b4)
            bb = convert((w, h), b)
            out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')


for image_set in sets:
    if not os.path.exists('labels/'):
        os.makedirs('labels/')
    image_ids = open('ImageSets/Main/%s.txt' % (image_set)).read().strip().split()
    list_file = open('%s.txt' % (image_set), 'w')
    for image_id in image_ids:
        list_file.write(abs_path + '/images/%s.png\n' % (image_id))
        convert_annotation(image_id)
    list_file.close()

2. YOLOv8-based detection on high-altitude infrared UAV imagery

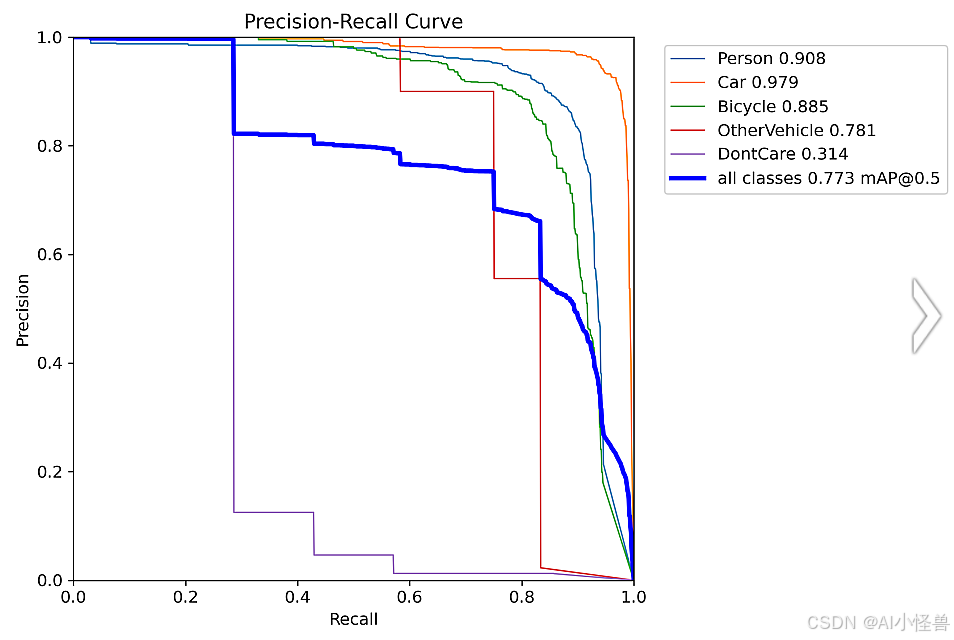
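Ultralytics YOLOv8 reads the dataset layout from a YAML file. A minimal sketch of generating one for the image lists written by voc_label.py follows. The helper name `build_dataset_yaml` and the root path are assumptions, not part of the original post; the keys (`path`, `train`, `val`, `test`, `names`) follow the Ultralytics dataset YAML convention.

```python
CLASSES = ["Person", "Car", "Bicycle", "OtherVehicle", "DontCare"]


def build_dataset_yaml(root, classes=CLASSES):
    """Return the text of a dataset YAML pointing at train/val/test lists.

    root is the folder that holds train.txt, val.txt and test.txt
    (the files written by voc_label.py); it is a placeholder here.
    """
    lines = [
        f"path: {root}",
        "train: train.txt",
        "val: val.txt",
        "test: test.txt",
        "names:",
    ]
    # One "index: name" entry per class, in the same order as voc_label.py.
    lines += [f"  {i}: {name}" for i, name in enumerate(classes)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder root; replace with the folder you ran voc_label.py in.
    print(build_dataset_yaml("/path/to/HIT-UAV"))
```

The class order must match the `classes` list in voc_label.py, because the label files store only the class index.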

2.1 Baseline results

The baseline mAP50 is 0.773.

YOLOv8n summary (fused): 168 layers, 3006623 parameters, 0 gradients, 8.1 GFLOPs

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 9/9 [00:07<00:00, 1.13it/s]

all 287 2460 0.818 0.707 0.773 0.525

Person 287 1168 0.933 0.799 0.908 0.495

Car 287 719 0.942 0.945 0.979 0.743

Bicycle 287 554 0.911 0.756 0.885 0.513

OtherVehicle 287 12 0.865 0.75 0.781 0.722

DontCare 287 7 0.439 0.286 0.314 0.152

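2.2 SimAM attention with a slicing operation (modified SimAM)

Original article: "YOLOv8 first release: exclusive attention modification | SimAM attention with a slicing operation, a modified SimAM for small-object detection" (CSDN blog)

💡💡💡Contribution: modify SimAM attention by adding a slicing operation, which strengthens feature extraction for small objects.

💡💡💡Problem: SimAM weights each pixel by how far it deviates from the mean of the whole feature map. Under that global statistic a small object can be overlooked: relative to the map-wide mean it may look similar to the background, so it receives only a weak boost. As a result, SimAM enhances small objects poorly.

💡💡💡Solution: add a slicing operation. Once the feature map is cut into blocks, a large object, whose texture is pronounced, shifts the mean of its own block and therefore receives less extra weight; after the blocks are merged it still remains highly recognizable and may even be enhanced further. A small object deviates more from its local mean, so it receives more weight and its features are strengthened. In this way the sws module gives both large and small objects a fair share of attention and enhancement.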
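The idea can be sketched in a few lines. The following is a minimal NumPy illustration, not the author's sws module: it assumes the slicing is a non-overlapping grid (2x2 by default) and applies the standard SimAM weighting inside each block, so the mean and variance become local rather than global. The function names, the `grid` parameter, and the default `e_lambda` are assumptions.

```python
import numpy as np


def simam(x, axes=(-2, -1), e_lambda=1e-4):
    """Standard SimAM weighting computed over the given spatial axes."""
    n = np.prod([x.shape[a] for a in axes]) - 1
    # Squared deviation of every pixel from the mean over `axes`.
    d = (x - x.mean(axis=axes, keepdims=True)) ** 2
    variance = d.sum(axis=axes, keepdims=True) / n
    # Larger deviation from the mean gives a larger attention weight.
    energy = d / (4.0 * (variance + e_lambda)) + 0.5
    return x / (1.0 + np.exp(-energy))  # same as x * sigmoid(energy)


def sliced_simam(x, grid=(2, 2), e_lambda=1e-4):
    """Apply SimAM independently inside each block of a (B, C, H, W) map."""
    b, c, h, w = x.shape
    gh, gw = grid
    if h % gh or w % gw:
        raise ValueError("H and W must be divisible by the grid size")
    if (h // gh) * (w // gw) < 2:
        raise ValueError("each block needs at least two pixels")
    # Split H into (gh, h // gh) and W into (gw, w // gw): one block per cell.
    blocks = x.reshape(b, c, gh, h // gh, gw, w // gw)
    # Statistics are taken over the within-block axes only (local mean).
    out = simam(blocks, axes=(3, 5), e_lambda=e_lambda)
    # Merge the blocks back into the original layout.
    return out.reshape(b, c, h, w)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feature_map = rng.normal(size=(1, 4, 8, 8))
    print(sliced_simam(feature_map).shape)
```

With `grid=(1, 1)` the function reduces to plain SimAM, which makes the two easy to compare on the same feature map.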

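💡💡💡How to apply it to YOLOv8

1) Use it directly as an attention module (the author reports that it clearly outperforms the original SimAM)

With the modified SimAM attention, mAP50 is 0.78.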

YOLOv8n-SWS summary (fused): 170 layers, 3006623 parameters, 0 gradients, 8.1 GFLOPs

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 9/9 [00:07<00:00, 1.15it/s]

all 287 2460 0.81 0.746 0.78 0.499

Person 287 1168 0.88 0.864 0.909 0.49

Car 287 719 0.927 0.961 0.979 0.738

Bicycle 287 554 0.852 0.836 0.892 0.521

OtherVehicle 287 12 0.793 0.641 0.686 0.6

DontCare 287 7 0.6 0.429 0.434 0.145

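2) Combine it efficiently with convolution, replacing the convolution operations of the original network

With the convolution-combined variant, mAP50 is 0.785.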

YOLOv8n-Conv_SWS summary (fused): 192 layers, 3007295 parameters, 0 gradients, 8.1 GFLOPs

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 9/9 [00:07<00:00, 1.15it/s]

all 287 2460 0.82 0.721 0.785 0.522

Person 287 1168 0.895 0.849 0.911 0.491

Car 287 719 0.931 0.947 0.98 0.739

Bicycle 287 554 0.854 0.818 0.885 0.523

OtherVehicle 287 12 0.894 0.705 0.814 0.681

DontCare 287 7 0.526 0.286 0.334 0.178
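To compare the three runs at a glance, the `all` rows of the logs above can be put side by side. The snippet below only does that arithmetic: the numbers are copied from the logs, while the dictionary layout and the function name `deltas` are illustrative.

```python
# Values copied from the "all" rows of the three validation logs above.
RESULTS = {
    "YOLOv8n": {"mAP50": 0.773, "mAP50-95": 0.525},
    "YOLOv8n-SWS": {"mAP50": 0.78, "mAP50-95": 0.499},
    "YOLOv8n-Conv_SWS": {"mAP50": 0.785, "mAP50-95": 0.522},
}


def deltas(results, baseline="YOLOv8n"):
    """Return each model's metric change relative to the baseline run."""
    base = results[baseline]
    return {
        name: {metric: round(value - base[metric], 3)
               for metric, value in metrics.items()}
        for name, metrics in results.items()
        if name != baseline
    }


if __name__ == "__main__":
    for name, change in deltas(RESULTS).items():
        print(name, change)
```

This gives +0.007 mAP50 and -0.026 mAP50-95 for YOLOv8n-SWS, and +0.012 mAP50 and -0.003 mAP50-95 for YOLOv8n-Conv_SWS, so the gains reported in this post are on mAP50.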

3. Other posts in this series

3) A high-accuracy YOLOv8-based detection algorithm for high-altitude infrared UAV imagery (original work)
