Dubbo连接数过多?全方位排查与优化指南

/ 问题发生时的错误日志。

·

深入解析Dubbo连接数暴增问题,从根本原因到解决方案的完整实战手册

文章目录

引言

想象一下,你的微服务系统就像一家热门餐厅 🍽️。突然某天,顾客(服务请求)爆满,所有的餐桌(连接)都被占满,新来的顾客只能在门口排队等待,整个系统陷入瘫痪…这就是Dubbo连接数过多的典型场景!

在微服务架构中,连接数管理直接影响系统的稳定性和性能。本文将带你从问题现象到根本解决,全面掌握Dubbo连接数优化的完整方案。

一、什么是连接数问题?为什么如此重要? 🤔

1.1 从一个真实案例说起

某电商平台在"双11"大促期间遭遇了这样的问题:

// 问题发生时的错误日志

Caused by: java.net.BindException: Address already in use

Caused by: org.apache.dubbo.remoting.RemotingException: Connection limit exceeded

Caused by: java.util.concurrent.TimeoutException: Waiting connection creation timeout

系统表现:

- 📈 服务响应时间从50ms飙升到5000ms

- 🔴 错误率从0.1%暴涨到30%

- 💀 部分服务实例完全不可用

- 🔥 CPU使用率100%,系统卡死

1.2 连接数的基本概念

在Dubbo中,连接是服务消费者与提供者之间的通信通道:

关键指标:

- 活动连接数:当前正在使用的连接数量

- 最大连接数:系统允许的最大连接限制

- 连接等待队列:等待获取连接的请求数量

1.3 连接数过多的危害

| 问题类型 | 短期影响 | 长期影响 |

|---|---|---|

| 性能下降 | 响应时间增加 | 用户体验恶化 |

| 系统不稳定 | 偶发性故障 | 服务不可用 |

| 资源耗尽 | CPU/内存飙升 | 系统崩溃 |

| 业务损失 | 请求失败 | 收入损失 |

二、连接数问题的根本原因分析 🔍

2.1 配置不当导致的连接泄露

2.1.1 连接未正确关闭

// 错误的用法 - 连接未关闭

public void wrongUsage() {

try {

ReferenceConfig<DemoService> reference = new ReferenceConfig<>();

reference.setInterface(DemoService.class);

DemoService service = reference.get();

service.invokeMethod(); // 使用服务

// 忘记调用 reference.destroy() !!!

} catch (Exception e) {

e.printStackTrace();

}

}

// 正确的用法 - 确保连接关闭

public void correctUsage() {

ReferenceConfig<DemoService> reference = null;

try {

reference = new ReferenceConfig<>();

reference.setInterface(DemoService.class);

DemoService service = reference.get();

service.invokeMethod();

} catch (Exception e) {

e.printStackTrace();

} finally {

if (reference != null) {

reference.destroy(); // 重要:释放连接

}

}

}

2.1.2 连接池配置不合理

# 有问题的配置

dubbo:

protocol:

name: dubbo

port: 20880

consumer:

connections: 1000 # 连接数设置过大

timeout: 1000

retries: 5

# 优化后的配置

dubbo:

protocol:

name: dubbo

port: 20880

dispatcher: message

threadpool: fixed

threads: 200

iothreads: 4

consumer:

connections: 100 # 合理设置连接数

timeout: 3000

retries: 2

check: false

2.2 资源竞争与死锁

2.2.1 线程死锁导致连接无法释放

@Component

public class ProblematicService {

private final Object lock1 = new Object();

private final Object lock2 = new Object();

@Reference

private ServiceA serviceA;

@Reference

private ServiceB serviceB;

// 可能产生死锁的方法

public void problematicMethod() {

synchronized (lock1) {

serviceA.doSomething(); // 持有lock1,等待ServiceA响应

synchronized (lock2) { // 可能被其他线程持有

serviceB.doSomething();

}

}

}

// 另一个可能产生死锁的方法

public void anotherProblematicMethod() {

synchronized (lock2) {

serviceB.doSomething(); // 持有lock2,等待ServiceB响应

synchronized (lock1) { // 可能被problematicMethod持有

serviceA.doSomething();

}

}

}

}

2.2.2 数据库连接池连带影响

# 数据库连接池配置影响Dubbo连接

spring:

datasource:

hikari:

maximum-pool-size: 200 # 数据库连接池过大

connection-timeout: 30000

max-lifetime: 600000

# Dubbo连接配置

dubbo:

consumer:

connections: 100

timeout: 5000

2.3 业务逻辑缺陷

2.3.1 循环调用问题

@Service

public class OrderServiceImpl implements OrderService {

@Reference

private UserService userService;

@Reference

private InventoryService inventoryService;

// 有问题的循环调用

public Order createOrder(OrderRequest request) {

// 调用用户服务

User user = userService.getUser(request.getUserId());

// 用户服务中可能又调用了订单服务

userService.updateUserOrderCount(request.getUserId());

// 调用库存服务

inventoryService.deductStock(request.getProductId(), request.getQuantity());

return processOrder(request, user);

}

}

2.3.2 大对象传输导致连接占用时间过长

// 有问题的接口设计 - 传输大对象

public interface DataService {

// 一次性返回大量数据

List<BigDataObject> getLargeData(int page, int size); // 可能返回数MB数据

// 长时间处理的方法

String processLongTimeOperation(String data); // 可能执行几分钟

}

// 优化后的接口设计

public interface OptimizedDataService {

// 分页获取数据

PageResult<BigDataObject> getPagedData(PageRequest request);

// 异步处理长时间操作

CompletableFuture<String> processAsync(String data);

// 流式传输大数据

Stream<BigDataObject> getStreamData(Criteria criteria);

}

三、连接数监控与诊断工具 📊

3.1 Dubbo内置监控指标

3.1.1 关键监控指标

// 连接数监控指标示例

public class ConnectionMetrics {

// 当前活跃连接数

private int activeConnections;

// 最大连接数限制

private int maxConnections;

// 连接等待数量

private int connectionWaitCount;

// 连接创建失败次数

private int connectionCreateFailures;

// 连接平均等待时间

private long averageWaitTime;

// 连接使用率

private double connectionUtilization;

}

3.1.2 监控配置

# 开启Dubbo监控

dubbo:

monitor:

protocol: registry

address: ${MONITOR_SERVER:127.0.0.1:7070}

metrics:

enable: true

protocol: prometheus

port: 9090

application:

qos-enable: true # 开启QOS

qos-port: 22222 # QOS端口

3.2 使用QOS实时诊断

3.2.1 QOS命令查询连接状态

# 连接到Dubbo QOS

telnet 127.0.0.1 22222

# 查看服务状态

ls

# 显示:

# PROVIDER:

# com.example.UserService

# CONSUMER:

# com.example.OrderService

# 查看连接信息

cd com.example.UserService

ls

# 显示连接详情

# 查看线程池状态

count com.example.UserService

3.2.2 实时监控脚本

#!/bin/bash

# connection_monitor.sh

DUBBO_HOST="127.0.0.1"

DUBBO_PORT="22222"

ALERT_THRESHOLD=80

while true; do

# 获取连接数信息

CONNECTION_INFO=$(echo "count com.example.UserService" | nc $DUBBO_HOST $DUBBO_PORT)

# 解析连接数

ACTIVE_CONNECTIONS=$(echo "$CONNECTION_INFO" | grep "active" | awk '{print $3}')

MAX_CONNECTIONS=$(echo "$CONNECTION_INFO" | grep "max" | awk '{print $3}')

# 计算使用率

UTILIZATION=$((ACTIVE_CONNECTIONS * 100 / MAX_CONNECTIONS))

echo "$(date): Connection utilization: $UTILIZATION%"

# 告警逻辑

if [ $UTILIZATION -gt $ALERT_THRESHOLD ]; then

echo "ALERT: Connection usage exceeded threshold!"

# 发送告警通知

send_alert "Dubbo connection usage: $UTILIZATION%"

fi

sleep 30

done

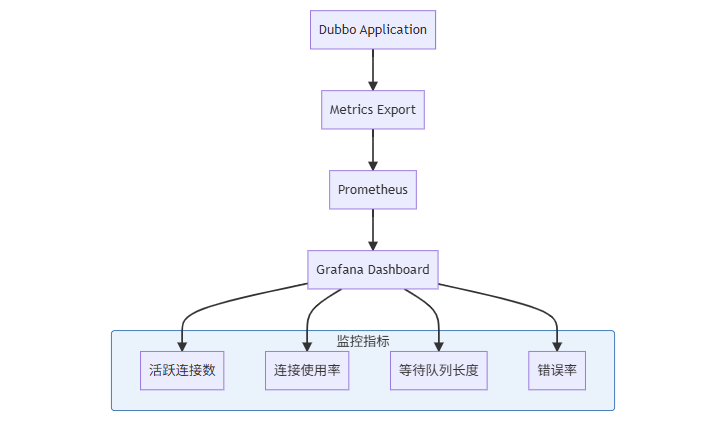

3.3 可视化监控面板

3.3.1 Prometheus + Grafana配置

# prometheus.yml 配置

scrape_configs:

- job_name: 'dubbo'

static_configs:

- targets: ['dubbo-app:9090']

metrics_path: '/metrics'

scrape_interval: 15s

# Dubbo指标规则

groups:

- name: dubbo_connection_alerts

rules:

- alert: HighConnectionUsage

expr: dubbo_connection_usage_percent > 80

for: 5m

labels:

severity: warning

annotations:

summary: "Dubbo connection usage is high"

description: "Connection usage is at {{ $value }}%"

3.3.2 关键监控图表

四、连接数优化实战方案 🛠️

4.1 连接池优化配置

4.1.1 合理设置连接数参数

# 优化的Dubbo配置

dubbo:

application:

name: optimized-service

protocol:

name: dubbo

port: 20880

dispatcher: message

threadpool: cached

threads: 500

iothreads: 8

queues: 0

accepts: 1000

consumer:

check: false

connections: 50 # 每个服务连接数

timeout: 3000

retries: 2

loadbalance: leastactive

async: false

provider:

threads: 200

iothreads: 4

dispatcher: message

accepts: 1000

payload: 8388608 # 8MB最大请求大小

4.1.2 连接池高级参数调优

@Configuration

public class DubboConnectionConfig {

@Bean

public ApplicationConfig applicationConfig() {

ApplicationConfig config = new ApplicationConfig();

config.setName("connection-optimized-app");

config.setQosEnable(true);

config.setQosPort(22222);

config.setQosAcceptForeignIp(false);

return config;

}

@Bean

public ProtocolConfig protocolConfig() {

ProtocolConfig config = new ProtocolConfig();

config.setName("dubbo");

config.setPort(20880);

config.setThreadpool("cached");

config.setThreads(500);

config.setQueues(0);

config.setAccepts(1000);

config.setPayload(8388608);

return config;

}

@Bean

public ReferenceConfig<UserService> userServiceReference() {

ReferenceConfig<UserService> reference = new ReferenceConfig<>();

reference.setInterface(UserService.class);

reference.setConnections(50); // 限制连接数

reference.setTimeout(3000);

reference.setRetries(2);

reference.setCheck(false);

reference.setLoadbalance("leastactive");

return reference;

}

}

4.2 连接复用与资源管理

4.2.1 连接复用最佳实践

@Service

public class ConnectionOptimizedService {

// 使用单例ReferenceConfig,避免重复创建

private final ReferenceConfig<OrderService> orderServiceReference;

private volatile OrderService orderService;

public ConnectionOptimizedService() {

this.orderServiceReference = createOrderServiceReference();

}

private ReferenceConfig<OrderService> createOrderServiceReference() {

ReferenceConfig<OrderService> reference = new ReferenceConfig<>();

reference.setInterface(OrderService.class);

reference.setConnections(10); // 限制连接数

reference.setTimeout(2000);

reference.setRetries(1);

reference.setCheck(false);

return reference;

}

public OrderService getOrderService() {

if (orderService == null) {

synchronized (this) {

if (orderService == null) {

orderService = orderServiceReference.get();

}

}

}

return orderService;

}

@PreDestroy

public void destroy() {

if (orderServiceReference != null) {

orderServiceReference.destroy(); // 应用关闭时释放连接

}

}

// 业务方法 - 复用连接

public void processOrder(OrderRequest request) {

OrderService service = getOrderService();

try {

service.createOrder(request);

} catch (Exception e) {

handleServiceException(e, request);

}

}

}

4.2.2 连接泄漏检测与防护

@Component

public class ConnectionLeakDetector {

private final Map<String, ConnectionStats> connectionStats = new ConcurrentHashMap<>();

private final ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(1);

@PostConstruct

public void init() {

// 定期检测连接泄漏

scheduler.scheduleAtFixedRate(this::detectLeaks, 5, 5, TimeUnit.MINUTES);

}

public void recordConnectionCreation(String serviceName) {

ConnectionStats stats = connectionStats.computeIfAbsent(serviceName,

k -> new ConnectionStats());

stats.incrementCreated();

}

public void recordConnectionDestruction(String serviceName) {

ConnectionStats stats = connectionStats.get(serviceName);

if (stats != null) {

stats.incrementDestroyed();

}

}

private void detectLeaks() {

for (Map.Entry<String, ConnectionStats> entry : connectionStats.entrySet()) {

String serviceName = entry.getKey();

ConnectionStats stats = entry.getValue();

long leaked = stats.getCreatedCount() - stats.getDestroyedCount();

if (leaked > 10) { // 泄漏阈值

logger.warn("Potential connection leak detected for {}: {} connections leaked",

serviceName, leaked);

// 触发告警或自动修复

handleConnectionLeak(serviceName, leaked);

}

}

}

private static class ConnectionStats {

private final AtomicLong created = new AtomicLong();

private final AtomicLong destroyed = new AtomicLong();

void incrementCreated() { created.incrementAndGet(); }

void incrementDestroyed() { destroyed.incrementAndGet(); }

long getCreatedCount() { return created.get(); }

long getDestroyedCount() { return destroyed.get(); }

}

}

4.3 异步化与流量控制

4.3.1 异步调用优化

@Service

public class AsyncOptimizedService {

@Reference(

timeout = 5000,

connections = 20,

loadbalance = "leastactive"

)

private UserService userService;

@Reference(

timeout = 3000,

connections = 15,

async = true // 开启异步调用

)

private OrderService orderService;

// 同步调用 - 适用于简单查询

public User getUserSync(Long userId) {

return userService.getUserById(userId);

}

// 异步调用 - 适用于复杂操作

public CompletableFuture<Order> createOrderAsync(OrderRequest request) {

CompletableFuture<Order> future = new CompletableFuture<>();

RpcContext.getContext().asyncCall(() -> {

try {

Order order = orderService.createOrder(request);

future.complete(order);

} catch (Exception e) {

future.completeExceptionally(e);

}

return null;

});

return future;

}

// 批量异步处理

public CompletableFuture<List<User>> batchGetUsersAsync(List<Long> userIds) {

List<CompletableFuture<User>> futures = userIds.stream()

.map(userId -> CompletableFuture.supplyAsync(() -> userService.getUserById(userId)))

.collect(Collectors.toList());

return CompletableFuture.allOf(futures.toArray(new CompletableFuture[0]))

.thenApply(v -> futures.stream()

.map(CompletableFuture::join)

.collect(Collectors.toList()));

}

}

4.3.2 限流与熔断配置

# 集成Sentinel进行流量控制

dubbo:

application:

name: flow-controlled-service

registry:

address: nacos://127.0.0.1:8848

consumer:

filter: sentinel

connections: 30

timeout: 2000

provider:

filter: sentinel

# Sentinel规则配置

spring:

cloud:

sentinel:

transport:

dashboard: localhost:8080

dubbo:

provider:

enabled: true

consumer:

enabled: true

# 限流规则

sentinel:

rules:

flow:

- resource: com.example.UserService:getUserById(java.lang.Long)

count: 100

grade: 1 # QPS限流

strategy: 0

degrade:

- resource: com.example.OrderService:createOrder(com.example.OrderRequest)

count: 5000

timeWindow: 10

grade: 0 # 响应时间熔断

五、应急处理与故障恢复 🚨

5.1 连接数暴增的紧急处理

5.1.1 快速诊断命令

#!/bin/bash

# emergency_diagnosis.sh

echo "=== Dubbo Connection Emergency Diagnosis ==="

# 1. 检查系统连接数

echo "1. System-wide connections:"

netstat -an | grep 20880 | wc -l

# 2. 检查Dubbo QOS状态

echo "2. Dubbo QOS status:"

echo "ls" | nc 127.0.0.1 22222

# 3. 检查Java进程线程数

echo "3. Java thread count:"

jstack <PID> | grep "dubbo" | wc -l

# 4. 检查内存使用

echo "4. Memory usage:"

jstat -gc <PID>

# 5. 快速重启单个服务实例

echo "5. Restarting problematic instance..."

# 实现重启逻辑

5.1.2 紧急限流措施

@Component

public class EmergencyFlowControl {

private final AtomicBoolean emergencyMode = new AtomicBoolean(false);

private final RateLimiter emergencyLimiter = RateLimiter.create(10.0); // 10 QPS

public <T> T executeWithEmergencyControl(Supplier<T> supplier, String operation) {

if (emergencyMode.get() && !emergencyLimiter.tryAcquire()) {

throw new ServiceDegradationException("Service in emergency mode, please try again later");

}

try {

return supplier.get();

} catch (Exception e) {

if (e instanceof RemotingException) {

// 触发紧急模式

enableEmergencyMode();

}

throw e;

}

}

private void enableEmergencyMode() {

if (emergencyMode.compareAndSet(false, true)) {

logger.warn("Emergency mode activated due to connection issues");

// 发送告警通知

notifyEmergencyTeam();

// 30分钟后自动关闭紧急模式

ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();

scheduler.schedule(this::disableEmergencyMode, 30, TimeUnit.MINUTES);

}

}

private void disableEmergencyMode() {

emergencyMode.set(false);

logger.info("Emergency mode deactivated");

}

}

5.2 连接池自动修复

5.2.1 连接池健康检查

@Component

public class ConnectionPoolHealthChecker {

private final ScheduledExecutorService healthChecker =

Executors.newSingleThreadScheduledExecutor();

@Reference(check = false)

private MonitorService monitorService;

@PostConstruct

public void startHealthCheck() {

healthChecker.scheduleAtFixedRate(this::checkConnectionHealth, 1, 1, TimeUnit.MINUTES);

}

private void checkConnectionHealth() {

try {

Map<String, ConnectionMetrics> metrics = monitorService.getConnectionMetrics();

for (Map.Entry<String, ConnectionMetrics> entry : metrics.entrySet()) {

String serviceName = entry.getKey();

ConnectionMetrics metric = entry.getValue();

if (metric.getUtilization() > 90) {

logger.warn("High connection utilization for {}: {}%",

serviceName, metric.getUtilization());

triggerConnectionPoolOptimization(serviceName);

}

if (metric.getWaitCount() > 100) {

logger.error("Connection pool exhausted for {}: {} requests waiting",

serviceName, metric.getWaitCount());

triggerEmergencyScaling(serviceName);

}

}

} catch (Exception e) {

logger.error("Connection health check failed", e);

}

}

private void triggerConnectionPoolOptimization(String serviceName) {

// 动态调整连接池参数

logger.info("Optimizing connection pool for: {}", serviceName);

// 实现动态调整逻辑

}

private void triggerEmergencyScaling(String serviceName) {

// 触发紧急扩容

logger.info("Triggering emergency scaling for: {}", serviceName);

// 实现自动扩容逻辑

}

}

六、预防措施与最佳实践 🛡️

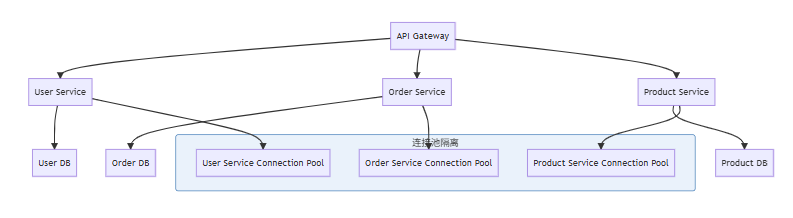

6.1 架构层面的预防措施

6.1.1 微服务拆分与治理

6.1.2 服务分级与隔离

# 基于重要性的连接池配置

dubbo:

references:

critical-service:

interface: com.example.PaymentService

connections: 100

timeout: 5000

retries: 3

important-service:

interface: com.example.OrderService

connections: 50

timeout: 3000

retries: 2

normal-service:

interface: com.example.NotificationService

connections: 20

timeout: 10000

retries: 1

async: true

6.2 开发规范与代码审查

6.2.1 连接使用规范

/**

* Dubbo连接使用规范示例

*/

public class DubboConnectionStandards {

/**

* 标准1: 使用try-with-resources或确保finally中释放连接

*/

public void standardUsage1() {

ReferenceConfig<DemoService> reference = null;

try {

reference = new ReferenceConfig<>();

reference.setInterface(DemoService.class);

DemoService service = reference.get();

// 业务逻辑

} finally {

if (reference != null) {

reference.destroy();

}

}

}

/**

* 标准2: 使用连接池,避免频繁创建销毁

*/

@Component

public static class ConnectionPoolUser {

private final ReferenceConfig<DemoService> reference;

public ConnectionPoolUser() {

this.reference = createReference();

}

public void businessMethod() {

DemoService service = reference.get();

// 复用连接

}

@PreDestroy

public void destroy() {

reference.destroy();

}

}

/**

* 标准3: 设置合理的超时和重试策略

*/

@Reference(

timeout = 3000,

retries = 2,

connections = 10

)

private DemoService properlyConfiguredService;

}

6.2.2 代码审查清单

| 检查项 | 通过标准 | 检查方法 |

|---|---|---|

| 连接释放 | 所有ReferenceConfig都有destroy调用 | 代码审查+静态分析 |

| 连接池配置 | 连接数设置合理 | 配置检查 |

| 超时设置 | 超时时间合理,避免长时间占用 | 配置检查 |

| 异常处理 | 连接异常有妥善处理 | 代码审查 |

| 资源清理 | @PreDestroy正确使用 | 代码审查 |

七、总结 📚

通过本文的全面解析,我们掌握了Dubbo连接数管理的完整知识体系:

7.1 核心要点回顾

✅ 问题识别:快速诊断连接数过多的症状和影响

✅ 原因分析:深入理解配置、代码、架构层面的根本原因

✅ 监控诊断:建立全方位的连接数监控体系

✅ 优化方案:连接池调优、异步化、限流等实战方案

✅ 应急处理:连接数暴增时的紧急应对措施

✅ 预防措施:架构规范和开发最佳实践

7.2 连接数优化路线图

| 阶段 | 重点工作 | 目标效果 |

|---|---|---|

| 基础优化 | 合理配置连接池参数 | 连接数稳定,无泄漏 |

| 高级优化 | 异步化改造、限流熔断 | 高并发下稳定性提升 |

| 架构优化 | 服务治理、资源隔离 | 系统整体弹性增强 |

| 智能运维 | 自动扩缩容、智能调优 | 无人值守的稳定性 |

7.3 关键成功因素

- 监控先行:没有监控就没有优化

- 渐进式优化:从小范围试点到全面推广

- 全链路思维:考虑上下游服务的相互影响

- 自动化运维:减少人工干预,提高响应速度

🎯 核心认知:连接数管理不是一次性的任务,而是需要持续优化的系统工程。建立完善的监控、预警、处理机制,才能在问题发生前预防,在问题发生时快速解决。

参考资料 📖

最佳实践提示:连接数优化是一个持续的过程,建议建立定期的连接数健康检查机制,结合业务发展趋势进行容量规划,防患于未然。

标签: Dubbo 连接数优化 性能调优 微服务 连接池 故障处理

更多推荐

已为社区贡献20条内容

已为社区贡献20条内容

所有评论(0)